
Time domain averaging: Atmospheric tides

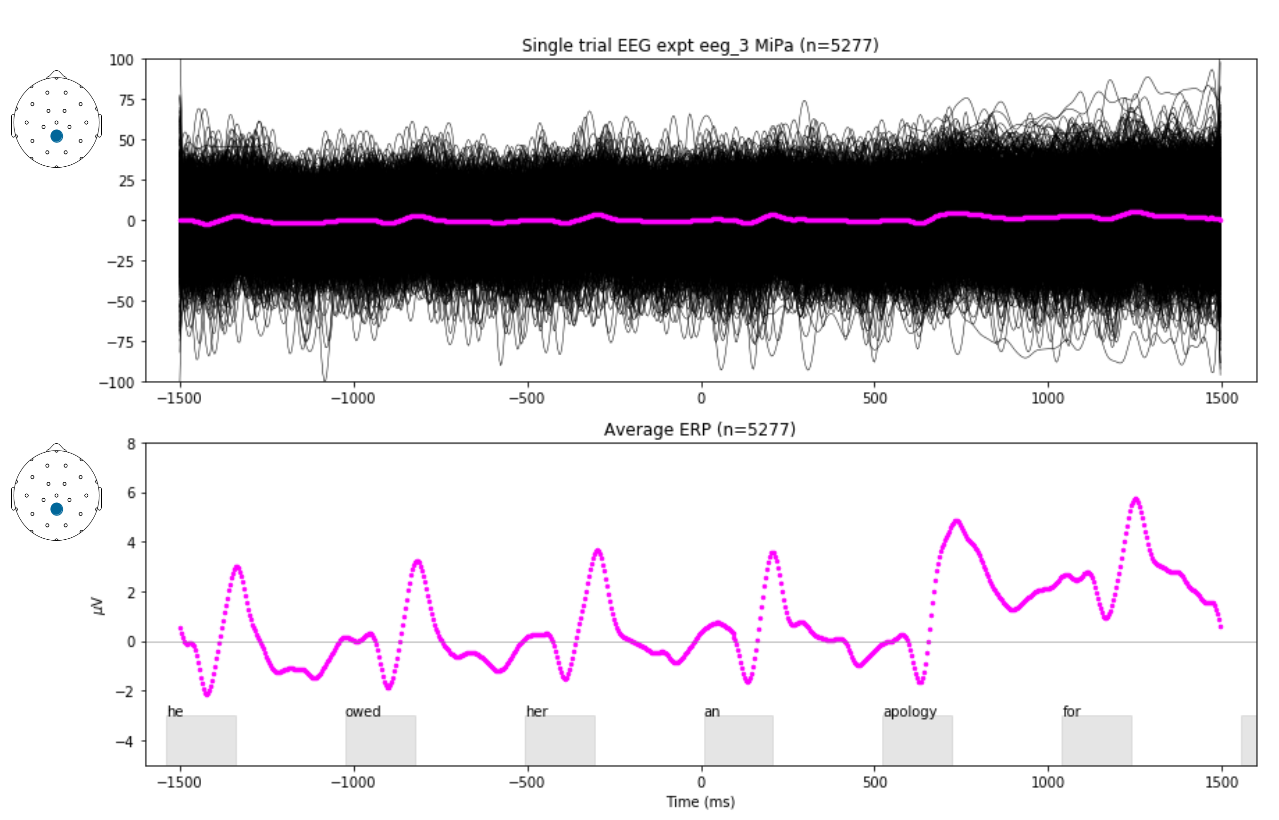

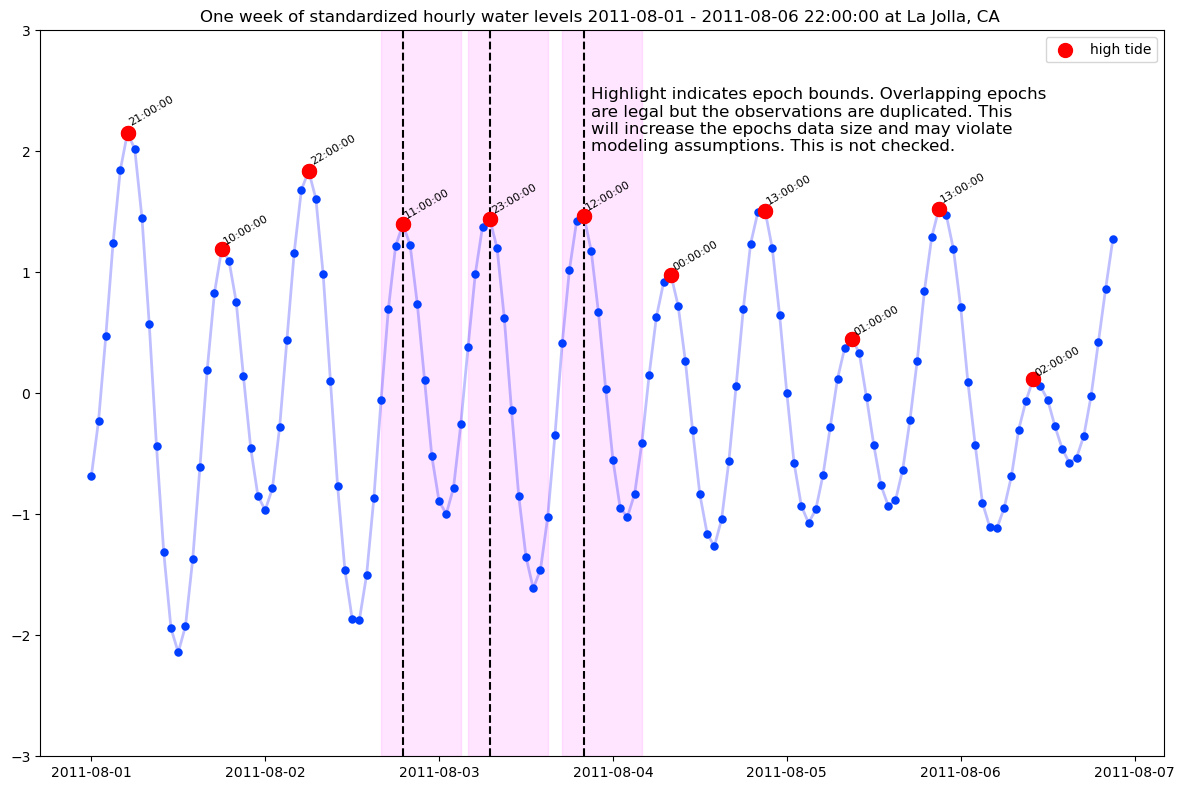

Dawson introduced the summation procedure for detecting tiny but regular time-varying brain responses to stimulation that are embedded in the irregular fluctuations of background EEG ([Dawson1954]). He noted that the idea of aggregating noisy measurements was already well known, and pointed to Laplace’s early-19th-century attempt to detect tiny but regular lunar gravitational tides in atmospheric pressure, embedded in larger fluctuations of barometric pressure driven by other factors such as solar warming, weather systems, and seasonal variation.

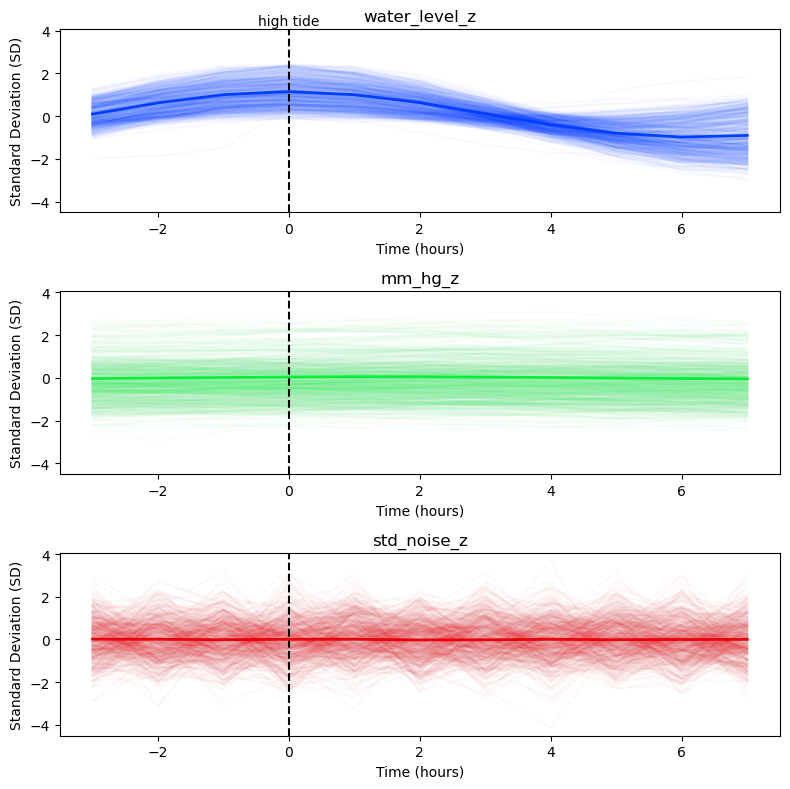

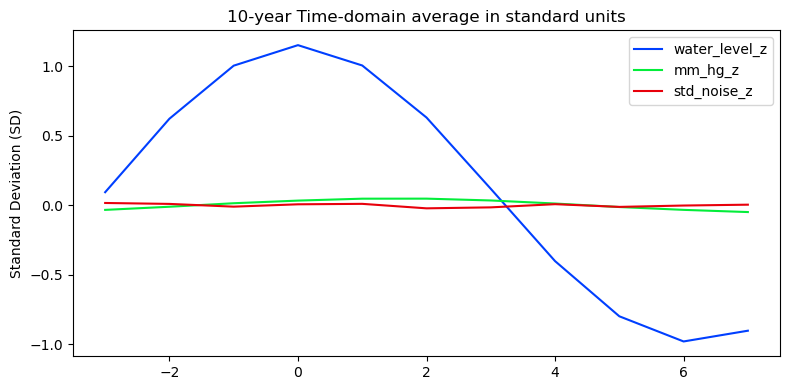

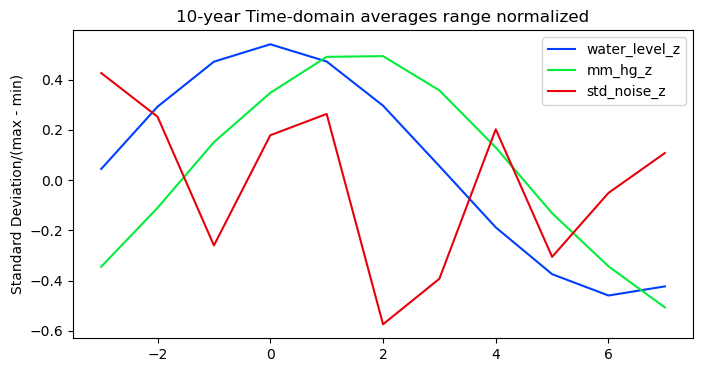

The subject of atmospheric lunar tides is complex and has a rich scientific history ([LinCha1969]). This example is not a serious model of the phenomenon but rather an illustration of how event-related regression modeling, in this case simple averaging (an intercept-only regression at each time point), can “see through” large unsystematic variation and find systematic time-varying patterns.
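The claim that averaging can “see through” unsystematic variation can be checked on synthetic data. The following is a minimal sketch, not part of the original example, under stated assumptions: a small sinusoidal signal with arbitrary amplitude 0.1 and a period of 12.42 hours (roughly that of the principal lunar semidiurnal tide, chosen only for flavor), buried in independent Gaussian noise with standard deviation 1.0. Averaging N time-locked epochs shrinks the noise at each time point by about 1/√N.

```python
import numpy as np

rng = np.random.default_rng(0)

n_epochs = 400  # hypothetical number of time-locked epochs
n_times = 25    # hypothetical samples per epoch (e.g., hourly)
times = np.arange(n_times)

# Assumed small, regular, time-locked signal (amplitude 0.1, period 12.42 samples)
signal = 0.1 * np.sin(2 * np.pi * times / 12.42)

# Assumed large irregular background: independent Gaussian noise, SD 1.0
noise = rng.normal(0.0, 1.0, size=(n_epochs, n_times))

# Broadcasting adds the same signal to every epoch's own noise
epochs = signal + noise

# Time domain average: mean across epochs at each time point
tda = epochs.mean(axis=0)

# Root-mean-square distance from the true signal
single_err = np.sqrt(np.mean((epochs[0] - signal) ** 2))
tda_err = np.sqrt(np.mean((tda - signal) ** 2))

print(f"RMS error, single epoch:      {single_err:.3f}")
print(f"RMS error, average of {n_epochs}:  {tda_err:.3f}")
print(f"1/sqrt(N) for comparison:     {1 / np.sqrt(n_epochs):.3f}")
```

Real barometric and sea level fluctuations are autocorrelated and nonstationary, so the 1/√N reduction is an idealization; the averaging step itself is the same.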

Data are NOAA sea level and meteorology data from station 9410230, La Jolla, CA (Scripps), for August 1, 2010 to July 1, 2020, downloaded January 29, 2020 from https://tidesandcurrents.noaa.gov. Water levels are measured relative to mean sea level (MSL). For more on how the epochs data were prepared, see NOAA tides and weather epochs.

from pathlib import Path
import numpy as np
import pandas as pd
from pandas.plotting import register_matplotlib_converters
from matplotlib import pyplot as plt
import fitgrid as fg
from fitgrid import DATA_DIR

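# set up plotting; "seaborn-bright" was renamed "seaborn-v0_8-bright" in matplotlib 3.6
# and the old name no longer works in recent releases, so use whichever is available
bright = "seaborn-v0_8-bright"
plt.style.use(bright if bright in plt.style.available else "seaborn-bright")
rc_colors = plt.rcParams['axes.prop_cycle'].by_key()['color']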

# suppress datetime FutureWarning (ugh)
# register_matplotlib_converters()

# path to hourly water level and meteorology data
WDIR = DATA_DIR / "CO-OPS_9410230"
data = pd.read_hdf(DATA_DIR / "CO-OPS_9410230.h5", key="data")
epochs_df = pd.read_hdf(DATA_DIR / "CO-OPS_9410230.h5", key="epochs_df")
epochs_df.drop(columns=["air_temp", "air_temp_z"], inplace=True)

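# compute epochs time domain average: the mean across epochs at each time point
# (numeric_only=True keeps pandas >= 2.0 from raising on non-numeric columns)
epochs_tda = epochs_df.groupby('time').mean(numeric_only=True).reset_index('time')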
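To make the averaging step concrete, here is a small sketch on a synthetic, long-format epochs table with one row per epoch and time point. The column names `epoch_id`, `time`, and `water_level`, the time range, and all values are hypothetical stand-ins, not the NOAA data. It computes the time domain average with `groupby("time").mean()` and checks that, at each time point, the result equals the intercept-only least-squares estimate, which is the sense in which simple averaging is a special case of event-related regression.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(1)

# Hypothetical long-format epochs table: one row per (epoch_id, time) sample
n_epochs = 50
times = np.arange(-3, 4)  # hypothetical hourly offsets around each time-locking event
toy = pd.DataFrame({
    "epoch_id": np.repeat(np.arange(n_epochs), len(times)),
    "time": np.tile(times, n_epochs),
})

# Synthetic water level: small time-locked pattern plus unit-variance noise
toy["water_level"] = (
    0.2 * np.cos(2 * np.pi * toy["time"] / 12.42)
    + rng.normal(0.0, 1.0, len(toy))
)

# Time domain average (epoch_id dropped so it is not averaged as data)
toy_tda = (
    toy.drop(columns="epoch_id")
    .groupby("time")
    .mean()
    .reset_index("time")
)

# The same numbers from an intercept-only least-squares fit at each time point
ols = {}
for t, grp in toy.groupby("time"):
    X = np.ones((len(grp), 1))  # design matrix: a single column of ones
    y = grp["water_level"].to_numpy()
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    ols[t] = beta[0]

print(toy_tda)
print(pd.Series(ols, name="ols_intercept"))
```

Adding predictor columns to `X` would turn the same per-time-point loop into a more general event-related regression; with only the intercept column it reduces to the mean.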